The gap isn't intelligence. It's architecture. Here's what 1.7 billion workflows taught us about production multi-agent systems.

The Production Reality Gap

The agentic AI industry has an architecture problem, but most people are solving the wrong thing.

Everyone is building agents. Smarter prompts, better models, yet many of these projects never make it to production. The agents are clever enough, but the architecture falls short.

Most implementations are either too rigid (heavy scaffolding that can't adapt) or too loose (unbounded agency). After watching 1.7 billion agentic workflows run this year across enterprise customers, the pattern is clear: the gap isn't intelligence. It's architecture.

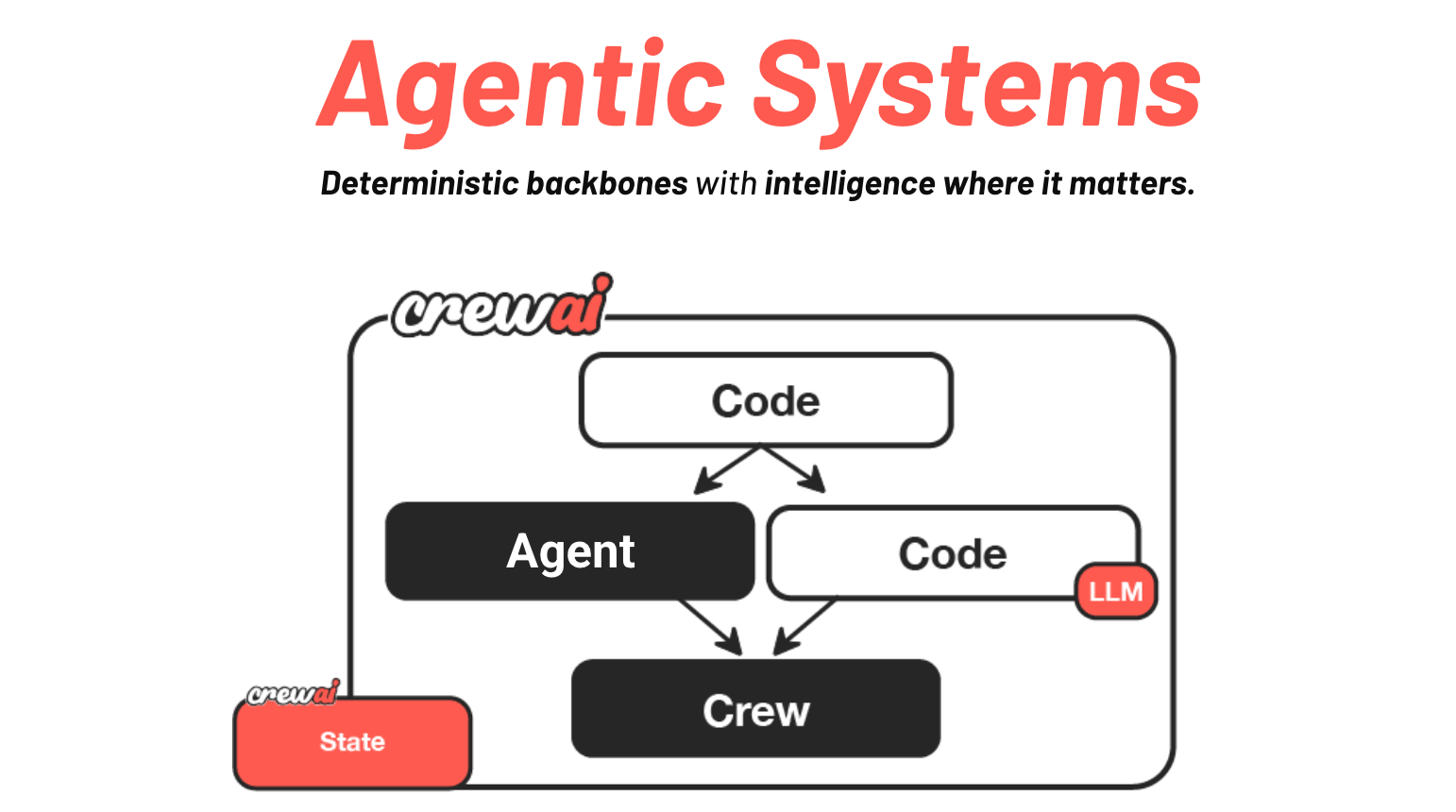

Here's what’s actually working: a deterministic backbone with intelligence where it matters.

We've processed agentic workflows for enterprise companies across healthcare, CPG, finance, logistics, and professional services. DocuSign increased email engagement rates while cutting sales research time from hours to minutes using this architecture. More on that below: it's a killer example.

An interesting thing about this pattern is that it shows up organically across customers. I'm calling it Agentic Systems.

There is a race to the bottom to make building AI agents easy and straightforward. There are many tools, yet most implementations are not ready for production.

They optimize for build time or demo time, but the fundamental gaps for deployment and the confidence it requires remain.

The Current State: Where the Industry Got Stuck

The agentic AI landscape has been evolving fast. Two years ago, the question was "Are agents even real?" Today, everyone is building them, and all sorts of vendors are rebranding their automation tools as "intelligent agents".

Here's what consistently fails though:

Prompt chains passing as agents. LLMs + tools in a loop. Call an LLM, parse output, chain another call, hit an API, format response. Add "agentic" to the docs and ship it. These are scripted API calls with an LLM in the middle. That's great if it's all your use case needs, but they collapse when you actually need agency, and complexity grows drastically if you keep extending them to support it later.

DAGs and graphs over maintainability. Nodes, edges, state machines: all visually impressive, and even conceptually sound. But as the system scales, production work becomes debugging the graph instead of solving the business problem. As one community member put it: "[Graph-based frameworks] give you flexibility over state, but once workflows scale, the debugging pain outweighs the benefits." When breaking changes ship weekly, you're maintaining the framework, not your business logic.

Autonomous agents without architectural constraints. Give it tools, set a goal, let it run. This is why we see predictions that sound like “X% of agentic AI deployments get canceled”. Unbounded agency without architecture doesn't give the confidence required for enterprises to deploy critical workflows.

The pattern seems to be that everyone is optimizing for agent intelligence, but almost no one is building for system architecture. This is a major gap: it gets in the way of building fast while instilling confidence, without sacrificing maintainability at scale.

The winners won't have the smartest agents. They'll have the architecture that makes agents trustworthy, deployable, and governable.

And this organic pattern around Agentic Systems seems to address a lot of that.

What Are Agentic Systems?

Start with what production actually requires, not what looks impressive in a demo, but what you need when real workflows and real money are on the line.

You need systems that at minimum are observable (trace every decision), governable (enforce policies), cost-controlled (predictable spend at scale), auditable (satisfy regulators), and maintainable (don't become nightmares as you scale).

Agentic Systems are architected in a way that gives you building blocks that compose, instead of abstractions that constrain, and they achieve that by bringing together two components:

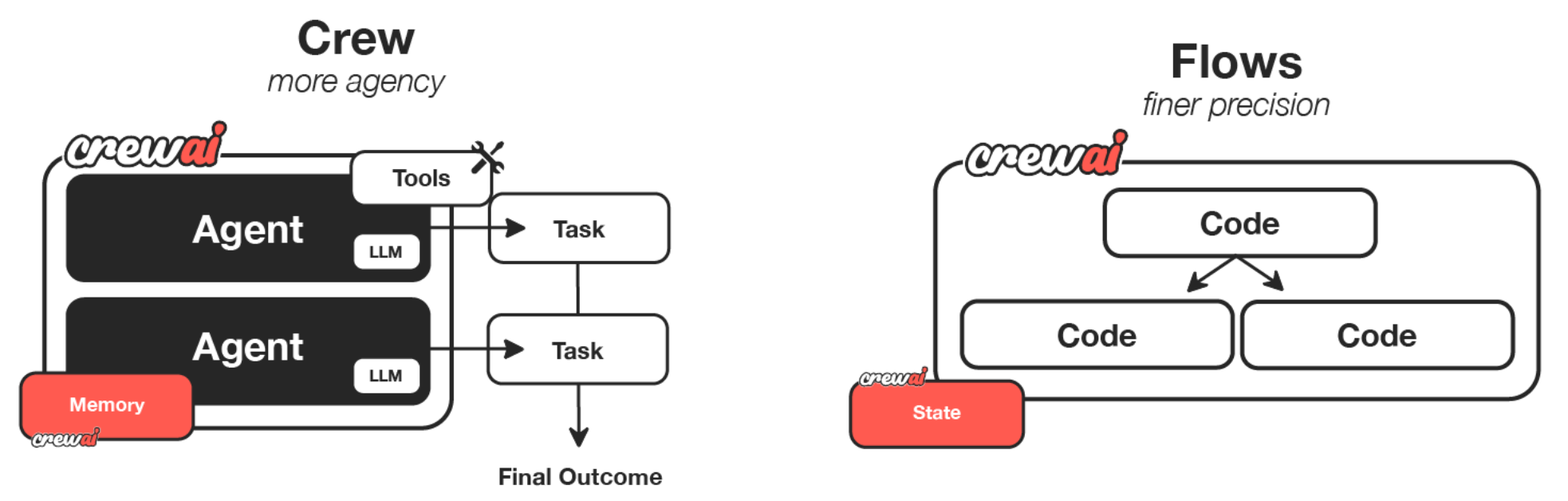

A deterministic backbone that owns the structure. We call these Flows. They define which steps execute, in what order, with what guardrails. Flows are a very thin code layer with almost no abstractions, just highly flexible decorators, state management, and other essential primitives to give you programmatic control. They handle the unglamorous but critical parts: conditional branching, state management across steps, whatever custom logic your business requires. You write regular code, not framework configuration. Same inputs, same execution path, predictable by design, all event-driven, modifiable at runtime.

Intelligence deployed where it matters. This is a spectrum: on the low agency side it could be a single ad-hoc LLM call or single agent. On the high agency side: an entire Crew with multiple agents collaborating. They're invoked intentionally by the Flow at specific steps. They operate within scoped boundaries defined by the Flow, and control always returns to the backbone when they're done. You get the adaptability and reasoning of AI without the unpredictability that kills deployments.

Structure where you need it, intelligence where it matters.
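To make the split concrete, here is a minimal sketch in plain Python. It is not the CrewAI Flows API: `FlowState`, `run_flow`, and `stub_classifier` are hypothetical names invented for illustration, and the classifier is a stand-in for whatever LLM call, agent, or Crew a real step would invoke. The point is only the shape: the backbone fixes the step order and the branching, the intelligent step is called at one place, and its output is constrained before control returns.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FlowState:
    """State the backbone carries from step to step (hypothetical)."""
    ticket: str
    category: str = ""
    reply: str = ""
    trace: list[str] = field(default_factory=list)


def run_flow(ticket: str, classify: Callable[[str], str]) -> FlowState:
    """Deterministic backbone: fixed step order, explicit branching."""
    state = FlowState(ticket=ticket.strip())

    # Step 1: plain code, no intelligence needed.
    state.trace.append("validate")
    if not state.ticket:
        state.reply = "rejected: empty ticket"
        return state

    # Step 2: the only intelligent step. The backbone scopes its output
    # to a known set of labels; anything else becomes "other".
    state.trace.append("classify")
    label = classify(state.ticket)
    state.category = label if label in {"billing", "technical"} else "other"

    # Step 3: control is back in the backbone, which branches in plain code.
    state.trace.append(f"route:{state.category}")
    routes = {"billing": "sent to finance queue",
              "technical": "sent to support queue"}
    state.reply = routes.get(state.category, "sent to manual review")
    return state


def stub_classifier(text: str) -> str:
    """Stand-in for an LLM call or agent (assumption, not a real model)."""
    return "billing" if "invoice" in text.lower() else "unknown"


if __name__ == "__main__":
    result = run_flow("My invoice is wrong", stub_classifier)
    print(result.trace, result.reply)
```

Because the intelligent step is passed in as a function, it can be swapped for a heavier or lighter implementation without touching the order of steps or the routing rules.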

This architecture is what companies like DocuSign, KT, Konecta, the US Department of Defense, AB InBev and many more are using in production to deliver business outcomes at scale.

Building Agentic Systems

The architecture is simple, but you need to know where to draw the lines.

If a step doesn't need intelligence (data validation, formatting, or calling an API with known parameters), it's just code in your Flow. Don't overcomplicate it with agents. The same engineering principles that brought us here are still valid, and KISS is a big one.

If you need a single completion, or maybe a simple function call, for things like "summarize this document," "extract these fields," or "classify this input", a single LLM call is enough. No need for agency overhead or extra complexity.

If you need one intelligent task with tool use, for things like "research this company and pull financial data" or "verify these credentials across multiple sources", then a single agent might be enough. It can reason, use tools, and handle the task, so there is no reason to jump into a full multi-agent abstraction just yet.

But as you get into more complex reasoning, collaboration, or multi-step intelligence, things like "conduct due diligence across legal, financial, and operational dimensions" or "research a topic and write a comprehensive report", a Crew with multiple agents compounds a lot of value. Multiple agents work together, each with a defined role, possibly delegating to and validating each other's work and learning from their own executions. The work is traceable and observable, with PII filtering and similar safeguards, including the native ability to take actions, reason about plans, and self-heal in case things go south.
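The four cases above amount to a small decision ladder: pick the lowest level of agency that covers what the step needs. A rough sketch of that rule in Python follows. The `Step` fields and the `pick_agency` function are hypothetical, made up for this illustration, and real steps rarely reduce to three booleans; treat it as a checklist in code form rather than a policy engine.

```python
from dataclasses import dataclass
from enum import Enum


class Agency(Enum):
    CODE = "plain code in the Flow"
    LLM_CALL = "single LLM call"
    AGENT = "single agent with tools"
    CREW = "Crew of collaborating agents"


@dataclass(frozen=True)
class Step:
    """Hypothetical description of what one step requires."""
    name: str
    needs_reasoning: bool = False      # judgment over unstructured input
    needs_tool_use: bool = False       # must choose and call tools itself
    needs_collaboration: bool = False  # several roles checking each other


def pick_agency(step: Step) -> Agency:
    """Return the lowest agency level that covers the step's needs."""
    if not step.needs_reasoning:
        # Known parameters, known logic: keep it as code, even if it calls an API.
        return Agency.CODE
    if step.needs_collaboration:
        return Agency.CREW
    if step.needs_tool_use:
        return Agency.AGENT
    return Agency.LLM_CALL


if __name__ == "__main__":
    steps = [
        Step("validate input"),
        Step("summarize this document", needs_reasoning=True),
        Step("research this company", needs_reasoning=True, needs_tool_use=True),
        Step("due diligence", needs_reasoning=True, needs_tool_use=True,
             needs_collaboration=True),
    ]
    for s in steps:
        print(f"{s.name}: {pick_agency(s).value}")
```

Checking `needs_reasoning` first encodes the KISS point: a step that calls an API with known parameters stays plain code even though it touches a tool.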

What breaks when you get the architecture wrong:

Putting everything in agents when some of it should be code. You can't debug it appropriately, costs spiral, behavior is somewhat unpredictable for that step and every change requires "testing the agent" instead of changing logic.

Squeezing too much into one agent. Context windows blow up, too many tools confuse it, and hallucinations increase. This is the ceiling people hit when they choose single-agent over multi-agent patterns.

Building complex workflows without architecture. When you have multi-step processes with real branching and state management, stringing together agents or LLM calls isn't enough. You need a backbone.

Not testing different models. What works on one model fails on another. Your architecture should let you swap models without rewriting your system.
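One way to keep models swappable, sketched here under assumptions, is to have each step depend on a small interface instead of a specific provider. `TextModel`, `EchoModel`, `ShoutModel`, `summarize_step`, and `compare_models` are all hypothetical names; the two stub classes stand in for real provider clients, which are not shown.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only thing a step is allowed to know about a model."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stub standing in for provider A (not a real client)."""
    def complete(self, prompt: str) -> str:
        return prompt


class ShoutModel:
    """Stub standing in for provider B (not a real client)."""
    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize_step(model: TextModel, document: str) -> str:
    """A step written against the interface, not a provider."""
    return model.complete(f"Summarize: {document}")


def compare_models(models: dict[str, TextModel], document: str) -> dict[str, str]:
    """Run the same step on every candidate model for side-by-side review."""
    return {name: summarize_step(m, document) for name, m in models.items()}


if __name__ == "__main__":
    candidates = {"a": EchoModel(), "b": ShoutModel()}
    for name, output in compare_models(candidates, "Q3 report").items():
        print(name, "->", output)
```

With this shape, trying a new model means adding one entry to the dictionary, and the step logic stays untouched.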

The winning pattern: a deterministic backbone (the Flow) dictating the core logic, with individual steps using different levels of agency, from an ad-hoc LLM call to a single agent to a complete Crew.

How DocuSign Built It

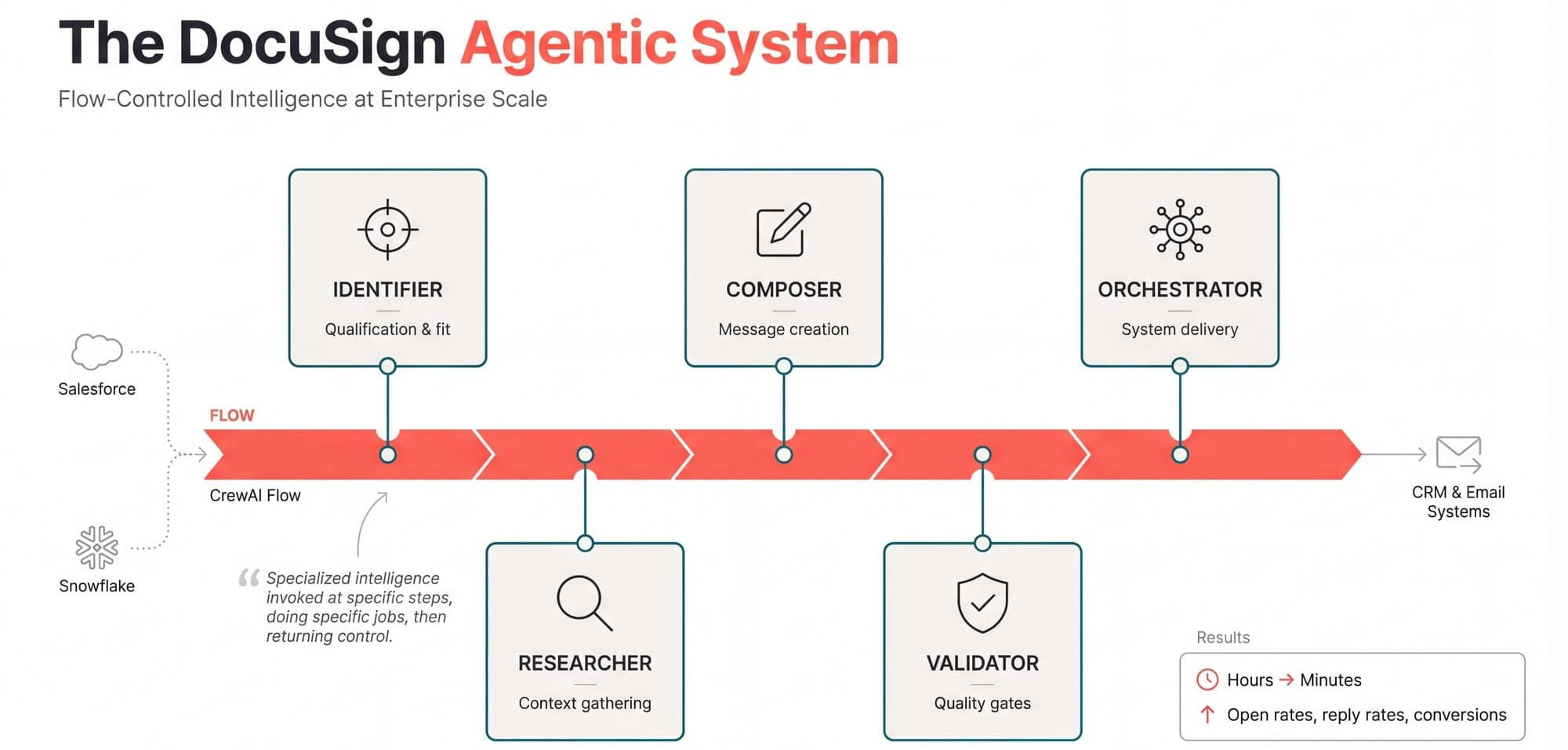

DocuSign increased email open rates, reply rates, and conversion rates while cutting sales research time from hours to minutes. Here's how they built it.

DocuSign, a publicly traded company with billions in revenue whose platform is used by 90% of Fortune 500 companies, faced a fundamental question: how do you personalize customer engagement at scale without turning every sales rep into a full-time researcher?

At our last CrewAI Signal Conference, their lead AI architect Vamsi and lead data engineer Dhruv shared how they built their sales outreach system as an Agentic System.

Scale is top of mind for them given their business penetration, and sales reps could not spend hours researching customers, reading company reports, checking latest news and positioning products before they could draft an email.

DocuSign was quick to adopt CrewAI Flows. Here's part of the architecture Vamsi and Dhruv helped spearhead:

The Flow implements the core deterministic backbone and defers to a set of specialized agents working together. These agents tap into data from Salesforce and Snowflake and apply business rules to decide on qualification and fit.

Through hallucination guardrails (a CrewAI Enterprise feature) they were able to react at runtime to certain conditions and implement a quality verification process that ensures a high bar on the final results.

The Flow manages state, branching, and validation throughout. Each agent gets context from previous steps. The Researcher feeds the Composer. The Composer feeds the Validator. But core control always returns to the Flow. When something needs manual review, the Flow routes it appropriately; when an agent fails, a Crew handles it gracefully.
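The Researcher, Composer, and Validator hand-off can be pictured with a small sketch. This is not DocuSign's implementation and not the CrewAI API: every name below is hypothetical, the agents are stub functions, and, as a simplifying assumption, an agent failure is routed to manual review by the backbone itself.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class OutreachState:
    """State owned by the backbone and passed between steps (hypothetical)."""
    account: str
    research: str = ""
    draft: str = ""
    status: str = "pending"
    errors: list[str] = field(default_factory=list)


def run_outreach(
    account: str,
    researcher: Callable[[str], str],
    composer: Callable[[str], str],
    validator: Callable[[str], bool],
) -> OutreachState:
    """Fixed order: research -> compose -> validate. The backbone decides routing."""
    state = OutreachState(account=account)
    try:
        state.research = researcher(state.account)  # Researcher feeds the Composer
        state.draft = composer(state.research)      # Composer feeds the Validator
        approved = validator(state.draft)
    except Exception as exc:
        # Assumption for this sketch: any agent failure goes to a human.
        state.errors.append(str(exc))
        state.status = "manual_review"
        return state
    state.status = "ready_to_send" if approved else "manual_review"
    return state


# Stub agents standing in for real ones.
def stub_researcher(account: str) -> str:
    return f"{account} recently expanded into new markets"


def stub_composer(research: str) -> str:
    return f"Hi, I saw that {research}."


def stub_validator(draft: str) -> bool:
    return len(draft) <= 200 and "{" not in draft


def failing_researcher(account: str) -> str:
    raise RuntimeError("CRM lookup timed out")


if __name__ == "__main__":
    print(run_outreach("Acme", stub_researcher, stub_composer, stub_validator).status)
    print(run_outreach("Acme", failing_researcher, stub_composer, stub_validator).status)
```

The agents never choose the next step. They return a value, and the backbone decides whether the result is sent or reviewed.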

They A/B tested this rigorously. Same customers, same time period: some got Crew-generated outreach, some got rep-generated. The agents matched or beat human reps on engagement metrics while dramatically cutting turnaround time. What took reps hours took the system minutes, and email open rates, reply rates, and conversion rates all improved.

What makes this work:

The agents are reusable, and so are the tools (through their internal agent and tools repositories in CrewAI Enterprise Platform). DocuSign is now using the same agent architecture for different use cases across the organization, not just outreach. That's the power of separating structure (Flow) from intelligence (Crews).

The Flow enforces business logic that can't be negotiated. The agents don't decide whether to do these steps. They provide intelligence within those steps.

The key insight from Vamsi and Dhruv: each agent operates within clear boundaries defined by the Flow. They're not free-roaming. They're specialized intelligence invoked at specific steps, doing specific jobs, then returning control.

Why This Matters

Different parts of your system will evolve at different rates:

Stable pieces (well-understood processes with clear rules): May never need much agency. Keep them simple, fast, and cheap.

Experimental parts (new use cases, uncertain requirements): Start with more agency to explore what works, then solidify into structured Flows as patterns emerge.

Compliance-critical steps (regulatory requirements, audit trails): Structure stays constant even as models improve. You're not betting compliance on model behavior.

Cost-sensitive volumes: Dial agency up when costs drop, dial back when they spike. Same architecture, different settings.
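"Same architecture, different settings" can be read as configuration: the step stays where it is in the Flow and only the agency level behind it changes. A minimal sketch, assuming a per-run budget drives the choice. The level names and every cost figure below are placeholders invented for illustration, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgencySetting:
    name: str
    est_cost_usd: float  # placeholder estimate per run, not a real figure


# Ordered from cheapest to most expensive (illustrative numbers only).
LEVELS = (
    AgencySetting("llm_call", 0.002),
    AgencySetting("agent", 0.03),
    AgencySetting("crew", 0.25),
)


def pick_level(budget_per_run_usd: float) -> str:
    """Highest agency level whose estimated cost fits the budget.

    Falls back to plain code when nothing fits.
    """
    affordable = [lvl for lvl in LEVELS if lvl.est_cost_usd <= budget_per_run_usd]
    return affordable[-1].name if affordable else "code"


if __name__ == "__main__":
    for budget in (0.001, 0.05, 1.0):
        print(budget, "->", pick_level(budget))
```

Changing the budget or the estimates changes which level runs, without changing the Flow that calls it.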

The teams that win in the long term aren't the ones chasing the latest model capabilities or the flashiest framework features. They're the ones building systems they can still maintain, debug, and evolve quickly, while keeping things sane two years from now, when half the team has turned over, and requirements have changed in ways nobody predicted.

The architectural decisions we're talking about have direct business impact.

Predictable costs. When you control where agency lives, you control spending. Dial it up for high-value workflows, down for routine ones. Budget AI like you budget infrastructure.

Faster iteration. Maintainable systems mean shipping new use cases in weeks, not quarters. DocuSign went from one use case to multiple across the organization because the architecture is reusable.

Reduced risk. Observable, governable systems mean you can actually deploy in regulated industries. Healthcare, finance, legal, places where "it usually works" isn't good enough.

Competitive moat. When your systems get smarter with each execution, competitors can't just copy your prompts and catch up. The architecture compounds advantages over time.

This isn't just better engineering. It's better business.

This Is Already Happening

We're not talking about some future vision. Agentic systems are running in production right now. Fortune 500 healthcare companies are processing credentialing workflows. Financial services firms conduct thousands of risk assessments each month. Logistics operations that can't afford downtime run on them. These aren't demos; they're systems people bet their jobs on.

The pattern? Same architecture every time. Flows manage the structure and compliance. Crews provide the intelligence where it matters. Memory makes systems more intelligent with each run. And when something breaks, engineers can actually debug it because the architecture doesn't get in their way.

If you're ready to build systems that actually work in production, the architecture is straightforward. Start with reliability. Design for maintainability. Let intelligence live where it matters, structure where it's needed.

There are two ways companies approach agents.

Some treat it as an engineering project: evaluating primitives, comparing developer tools, measuring success by traces and metrics.

Others see it as transformational: measuring success by use cases in production, business outcomes delivered, systems that work at scale.

The future doesn't belong to the teams with the smartest agents. It belongs to the teams with the strongest backbones.
